Introduction

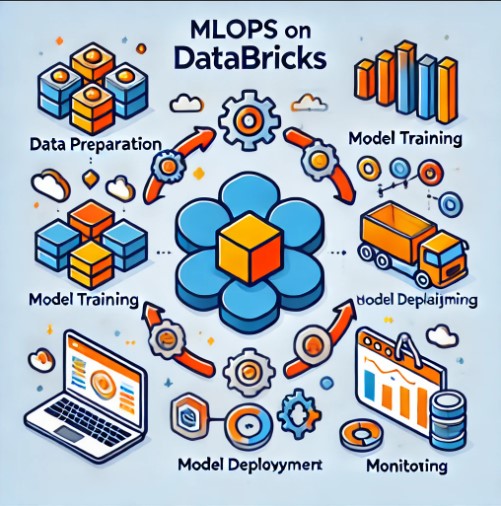

In the rapidly evolving landscape of data science, Machine Learning Operations (MLOps) has become crucial to managing, scaling, and automating machine learning workflows. Databricks, a unified data analytics platform, has emerged as a powerful tool for implementing MLOps, offering an integrated environment for data preparation, model training, deployment, and monitoring. This guide explores how to apply MLOps on Databricks, covering fundamental concepts, practical examples, and advanced techniques to ensure scalable, reliable, and efficient machine learning operations.

What is MLOps?

MLOps, a blend of “Machine Learning” and “Operations,” is a set of best practices designed to bridge the gap between machine learning model development and production deployment. It incorporates tools, practices, and methodologies from DevOps, helping data scientists and engineers create, manage, and scale models in a collaborative and agile way. MLOps on Databricks, specifically, leverages the platform’s scalability, collaborative capabilities, and MLflow for effective model management and deployment.

Why Choose Databricks for MLOps?

Databricks offers several benefits that make it a suitable choice for implementing MLOps:

- Scalability: Supports large-scale data processing and model training.

- Collaboration: A shared workspace for data scientists, engineers, and stakeholders.

- Integration with MLflow: Simplifies model tracking, experimentation, and deployment.

- Automated Workflows: Enables pipeline automation to streamline ML workflows.

By choosing Databricks, organizations can simplify their ML workflows, ensure reproducibility, and bring models to production more efficiently.

Setting Up MLOps in Databricks

Step 1: Preparing the Databricks Environment

Before diving into MLOps on Databricks, set up your environment for optimal performance.

- Provision a Cluster: Choose a cluster configuration that fits your data processing and ML model training needs.

- Install ML Libraries: Databricks supports popular libraries such as TensorFlow, PyTorch, and scikit-learn. They come preinstalled on Databricks Runtime for Machine Learning; on a standard runtime, install them on your cluster as needed.

- Integrate with MLflow: MLflow is built into Databricks, allowing easy access to experiment tracking, model management, and deployment capabilities.

Step 2: Data Preparation

Data preparation is fundamental for building successful ML models. Databricks provides several tools for handling this efficiently:

- ETL Pipelines: Use Databricks to create ETL (Extract, Transform, Load) pipelines for data processing and transformation.

- Data Versioning: Track different versions of data to ensure model reproducibility.

- Feature Engineering: Transform raw data into meaningful features for your model.
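
The three ideas above can be sketched on a tiny in-memory table. This example uses pandas as a stand-in for a Spark pipeline, and a content hash as a stand-in for a versioned table format such as Delta Lake; the column names and the threshold of 20 are hypothetical and chosen only for illustration.

```python
import hashlib

import pandas as pd

# Extract: a small hypothetical raw dataset with a missing value
raw = pd.DataFrame(
    {
        "customer_id": [1, 2, 2, 3],
        "amount": [10.0, None, 25.0, 40.0],
        "signup_date": ["2024-01-05", "2024-02-10", "2024-02-10", "2024-03-15"],
    }
)

# Transform: drop rows without an amount, keep one row per customer
clean = raw.dropna(subset=["amount"]).drop_duplicates(subset=["customer_id"]).copy()
clean["signup_date"] = pd.to_datetime(clean["signup_date"])

# Feature engineering: derive model-ready columns from the raw fields
clean["signup_month"] = clean["signup_date"].dt.month
clean["is_large_order"] = (clean["amount"] > 20).astype(int)

# Data versioning (simplified): fingerprint the exact training data so a
# model run can record which data it was trained on
data_version = hashlib.sha256(clean.to_csv(index=False).encode("utf-8")).hexdigest()[:12]
print(clean)
print("data version:", data_version)
```

Recording `data_version` alongside a training run is one lightweight way to tie a model back to the data that produced it.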

Building and Training Models on Databricks

Once data is prepared, the next step is model training. Databricks provides various methods for building models, from basic to advanced.

Basic Model Training

For beginners, starting with Scikit-Learn is a good choice for building basic models. Here’s a quick example:

```python
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split

# `data` (feature matrix) and `labels` (target vector) are assumed to be loaded already.

# Split data into training and test sets (80/20)
X_train, X_test, y_train, y_test = train_test_split(data, labels, test_size=0.2)

# Train model
model = LogisticRegression()
model.fit(X_train, y_train)

# Evaluate model on the held-out test set
accuracy = accuracy_score(y_test, model.predict(X_test))
print("Model Accuracy:", accuracy)
```

Advanced Model Training with Hyperparameter Tuning

Databricks integrates with Hyperopt, a Python library for hyperparameter tuning, to improve model performance.

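```python
from hyperopt import STATUS_OK, Trials, fmin, hp, tpe

# Reuses X_train, X_test, y_train, y_test and the scikit-learn imports from the basic example.

def objective(params):
    # Train with the sampled regularization strength and score the result
    model = LogisticRegression(C=params['C'])
    model.fit(X_train, y_train)
    accuracy = accuracy_score(y_test, model.predict(X_test))
    # Hyperopt minimizes the loss, so return the negative accuracy
    return {'loss': -accuracy, 'status': STATUS_OK}

# Search space: C sampled uniformly between 0.001 and 1
space = {
    'C': hp.uniform('C', 0.001, 1)
}

trials = Trials()
best_params = fmin(objective, space, algo=tpe.suggest, max_evals=100, trials=trials)
print("Best Parameters:", best_params)
```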

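This script searches for the value of C that gives the highest accuracy for logistic regression, evaluating 100 candidates chosen by the Tree-structured Parzen Estimator (TPE) algorithm, which automates the hyperparameter tuning process. Because the objective scores every candidate on the test set, hold out a separate validation split or use cross-validation if you need an unbiased estimate of final performance.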

Model Deployment on Databricks

Deploying a model is essential for bringing machine learning insights to end users. Databricks facilitates both batch and real-time deployment methods.

Batch Inference

In batch inference, you process large batches of data at specific intervals. Here’s how to set up a batch inference pipeline on Databricks:

- Register Model with MLflow: Save the trained model in MLflow to manage versions.

- Create a Notebook Job: Schedule a job on Databricks to run batch inferences periodically.

- Save Results: Store the results in a data lake or warehouse.

Real-Time Deployment with Databricks and MLflow

For real-time applications, you can deploy models as REST endpoints. Here’s a simplified outline:

- Register the Model: Register the trained model with MLflow so that a specific version is available for serving.

- Set Up MLflow Model Serving: MLflow allows you to expose your model as an API endpoint.

- Invoke the API: Send requests to the API for real-time predictions.
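
Invoking the endpoint amounts to an authenticated HTTP POST with a JSON body. The sketch below builds such a request with the standard library and does not send it. The workspace URL, endpoint name, token placeholder, and feature names are all hypothetical; the `dataframe_split` payload follows the MLflow scoring format, and the URL pattern is assumed to match a Databricks serving endpoint.

```python
import json
import urllib.request


def build_scoring_request(workspace_url, endpoint_name, token, columns, rows):
    """Build (but do not send) a scoring request for a model serving endpoint."""
    url = f"{workspace_url.rstrip('/')}/serving-endpoints/{endpoint_name}/invocations"
    payload = {"dataframe_split": {"columns": columns, "data": rows}}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# All values below are placeholders for illustration only
request = build_scoring_request(
    workspace_url="https://example.cloud.databricks.com",
    endpoint_name="churn-model",
    token="<personal-access-token>",
    columns=["f0", "f1"],
    rows=[[0.1, 0.2]],
)
print(request.get_method(), request.full_url)

# To send the request against a real endpoint:
# with urllib.request.urlopen(request) as response:
#     predictions = json.loads(response.read())
```

Keeping request construction separate from sending makes the payload easy to inspect and test before it reaches a live endpoint.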

Monitoring and Managing Models

Model monitoring is a critical component of MLOps. It ensures the deployed model continues to perform well.

Monitoring with MLflow

MLflow can be used to log key metrics and errors over time, which gives you the history needed to spot drift and performance problems.

- Track Metrics: Record metrics like accuracy, precision, and recall in MLflow to monitor model performance.

- Drift Detection: Monitor model predictions over time to detect changes in data distribution.

- Alerts and Notifications: Set up alerts to notify you of significant performance drops.
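
Drift detection needs a concrete statistic to compare training-time data with recent production data. The article does not prescribe one, so the sketch below uses the Population Stability Index (PSI) as one common choice, computed with NumPy on synthetic data. The thresholds mentioned in the comments are rule-of-thumb conventions, not Databricks or MLflow defaults.

```python
import numpy as np


def population_stability_index(expected, actual, bins=10):
    """Compare two samples of one feature; larger values mean more drift."""
    expected = np.asarray(expected, dtype=float)
    actual = np.asarray(actual, dtype=float)
    # Interior bin edges come from the baseline quantiles, so each bin holds
    # roughly the same share of baseline data
    interior_edges = np.quantile(expected, np.linspace(0, 1, bins + 1))[1:-1]
    expected_counts = np.bincount(np.searchsorted(interior_edges, expected), minlength=bins)
    actual_counts = np.bincount(np.searchsorted(interior_edges, actual), minlength=bins)
    # Clip to avoid division by zero and log(0) for empty bins
    eps = 1e-6
    expected_frac = np.clip(expected_counts / len(expected), eps, None)
    actual_frac = np.clip(actual_counts / len(actual), eps, None)
    return float(np.sum((actual_frac - expected_frac) * np.log(actual_frac / expected_frac)))


rng = np.random.default_rng(0)
baseline = rng.normal(0.0, 1.0, 5000)   # feature values seen at training time
stable = rng.normal(0.0, 1.0, 5000)     # production data, same distribution
shifted = rng.normal(1.0, 1.0, 5000)    # production data, mean shifted by 1

psi_stable = population_stability_index(baseline, stable)
psi_shifted = population_stability_index(baseline, shifted)

# Common rule of thumb: below 0.1 is stable, above 0.25 suggests significant drift
print(f"PSI stable:  {psi_stable:.4f}")
print(f"PSI shifted: {psi_shifted:.4f}")
```

A value like this could be logged with `mlflow.log_metric` on each monitoring run, and an alert raised when it crosses the chosen threshold.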

Retraining and Updating Models

When a model’s performance degrades, retraining is necessary. Databricks lets you automate model retraining with scheduled jobs:

- Schedule a Retraining Job: Use Databricks jobs to schedule periodic retraining.

- Automate Model Replacement: Replace old models in production with retrained models using MLflow.
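
Both steps can be sketched in a few lines. The job definition below follows the general shape of a Databricks Jobs API payload with a Quartz cron schedule, but the job name, notebook path, and cluster ID are placeholders. The promotion rule is a hypothetical helper, one possible gate rather than a built-in MLflow feature.

```python
import json

# Hypothetical job definition: run a retraining notebook every Monday at 02:00 UTC
retraining_job = {
    "name": "weekly-model-retraining",
    "schedule": {
        "quartz_cron_expression": "0 0 2 ? * MON",
        "timezone_id": "UTC",
        "pause_status": "UNPAUSED",
    },
    "tasks": [
        {
            "task_key": "retrain",
            "notebook_task": {"notebook_path": "/Repos/ml/retrain_model"},
            "existing_cluster_id": "<cluster-id>",
        }
    ],
}


def should_promote(champion_accuracy, challenger_accuracy, min_improvement=0.01):
    """Promote the retrained model only if it beats production by a margin."""
    return challenger_accuracy - champion_accuracy >= min_improvement


print(json.dumps(retraining_job, indent=2))
print("Promote retrained model:", should_promote(0.90, 0.92))
```

Requiring a minimum improvement before replacing the production model avoids swapping versions over differences that are within normal evaluation noise.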

FAQ: MLOps on Databricks

What is MLOps on Databricks?

MLOps on Databricks involves using the Databricks platform for scalable, collaborative, and automated machine learning workflows, from data preparation to model monitoring and retraining.

Why is Databricks suitable for MLOps?

Databricks integrates with MLflow, offers scalable compute, and has built-in collaborative tools, making it a robust choice for MLOps.

How does MLflow enhance MLOps on Databricks?

MLflow simplifies experiment tracking, model management, and deployment, providing a streamlined workflow for managing ML models on Databricks.

Can I perform real-time inference on Databricks?

Yes, Databricks supports real-time inference by deploying models as API endpoints using MLflow’s Model Serving capabilities.

How do I monitor deployed models on Databricks?

MLflow on Databricks allows you to track metrics, detect drift, and set up alerts to monitor deployed models effectively.

Conclusion

Implementing MLOps on Databricks transforms how organizations handle machine learning models, providing a scalable and collaborative environment for data science teams. By leveraging tools like MLflow and Databricks jobs, businesses can streamline model deployment, monitor performance, and automate retraining to ensure consistent, high-quality predictions. As machine learning continues to evolve, adopting platforms like Databricks will help data-driven companies remain agile and competitive.

For more information on MLOps, explore Microsoft’s MLOps guide and MLflow documentation on Databricks to deepen your knowledge.