
Introduction

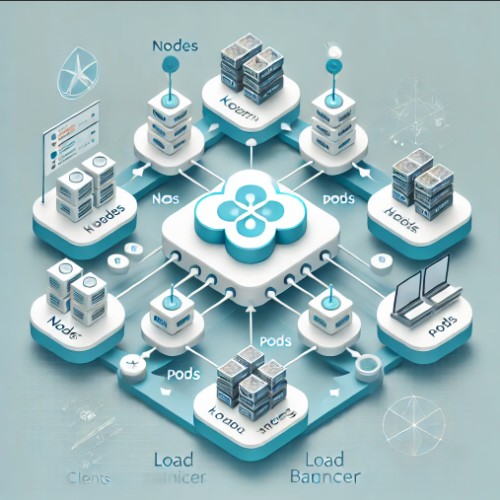

Kubernetes has revolutionized the way modern applications are deployed and managed. Among its many features, Kubernetes load balancing stands out as a critical mechanism. It distributes application traffic across the pods that run your containers, which improves scalability, availability, and performance. Whether you’re managing a microservices architecture or deploying a high-traffic web application, understanding Kubernetes load balancing is essential.

In this article, we’ll delve into the fundamentals of Kubernetes load balancing, explore its types, and provide practical examples to help you leverage this feature effectively.

What Is Kubernetes Load Balancing?

Kubernetes load balancing refers to the process of distributing network traffic across multiple pods or services in a Kubernetes cluster. It spreads application workloads across the available replicas, which prevents any single pod from being overloaded and keeps the application serving requests when individual pods fail.
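Load balancing only has something to do when several pods share a common label. As a minimal sketch, the Deployment below runs three replicas labeled app: my-app that listen on port 8080, the label and port that the Service examples later in this article select and forward to. The name my-app, the image my-app:1.0, and the replica count are illustrative assumptions, not values from a real setup.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app                # hypothetical Deployment name
spec:
  replicas: 3                 # three pods for Services to spread traffic across
  selector:
    matchLabels:
      app: my-app
  template:
    metadata:
      labels:
        app: my-app           # the label that Service selectors match on
    spec:
      containers:
        - name: my-app
          image: my-app:1.0   # hypothetical image serving HTTP on port 8080
          ports:
            - containerPort: 8080
```

Scaling the Deployment up or down changes how many pods share the traffic; the Services in front of it do not need to change.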

Why Is Load Balancing Important?

- Scalability: Efficiently manage increasing traffic.

- High Availability: Reduce downtime by rerouting traffic to healthy pods.

- Performance Optimization: Minimize latency by balancing requests.

- Fault Tolerance: Automatically redirect traffic away from failing components.

Types of Kubernetes Load Balancing

1. Internal Load Balancing

Internal load balancing occurs within the Kubernetes cluster. It manages traffic between services and pods.

Examples:

- Service-to-Service communication.

- Distributing traffic among the pods of a Deployment.
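For service-to-service communication, a client usually addresses another workload by its Service DNS name rather than by pod IPs. The sketch below assumes the my-clusterip-service from the ClusterIP example later in this article lives in the default namespace of a cluster using the default cluster.local domain. The my-frontend workload, its image, and the BACKEND_URL variable its code would read are hypothetical.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-frontend                # hypothetical client workload
spec:
  replicas: 2
  selector:
    matchLabels:
      app: my-frontend
  template:
    metadata:
      labels:
        app: my-frontend
    spec:
      containers:
        - name: frontend
          image: my-frontend:1.0   # hypothetical image
          env:
            # Cluster DNS resolves the Service name to its stable ClusterIP;
            # connections to that IP are then spread across the backing pods.
            - name: BACKEND_URL    # hypothetical variable read by the app
              value: "http://my-clusterip-service.default.svc.cluster.local:80"
```

The frontend never needs to know how many backend pods exist or what their IPs are, which is what lets the backend scale independently.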

2. External Load Balancing

External load balancing handles traffic from outside the Kubernetes cluster, directing it to appropriate services within the cluster.

Examples:

- Exposing a web application to external users.

- Managing client requests through a cloud-based load balancer.

3. Client-Side Load Balancing

In this approach, the client itself decides which pod receives each request, typically using a library or framework with built-in load-balancing support, such as gRPC.
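In Kubernetes, client-side balancing is commonly paired with a headless Service (clusterIP: None). Instead of a single virtual IP, cluster DNS then returns the IPs of the ready pods, so the client can spread requests across them itself. This matters for gRPC in particular: it multiplexes many requests over long-lived HTTP/2 connections, and a regular Service balances per connection, not per request. A sketch, where the Service name and port are assumptions:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-grpc-headless       # hypothetical name
spec:
  clusterIP: None              # headless: DNS returns pod IPs, no virtual IP
  selector:
    app: my-app
  ports:
    - name: grpc
      protocol: TCP
      port: 8080
      targetPort: 8080
```

A gRPC client would then dial a target along the lines of dns:///my-grpc-headless.default.svc.cluster.local:8080 with its round_robin load-balancing policy enabled; the exact configuration depends on the gRPC language implementation.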

4. Server-Side Load Balancing

Here, the server side (typically a Kubernetes Service, implemented on each node by kube-proxy) manages the distribution of requests among pods.
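By default, a Service picks a backend pod for each new connection without regard to which client opened it. If an application keeps per-client state in memory, a Service can instead request client-IP session affinity. The sketch below reuses the app: my-app label from this article; the Service name and timeout value are assumptions.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-sticky-service      # hypothetical name
spec:
  selector:
    app: my-app
  ports:
    - protocol: TCP
      port: 80
      targetPort: 8080
  sessionAffinity: ClientIP    # send a given client IP to the same pod
  sessionAffinityConfig:
    clientIP:
      timeoutSeconds: 3600     # how long affinity lasts (default is 10800)
```

Affinity trades evenness for stickiness: clients behind a shared NAT or proxy all appear as one IP and land on the same pod.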

Key Components of Kubernetes Load Balancing

1. Services

Kubernetes Services provide a stable virtual IP address and DNS name in front of a changing set of pod endpoints. Types include:

- ClusterIP: The default type; reachable only from inside the cluster.

- NodePort: Exposes the service on a static port on every node’s IP.

- LoadBalancer: Provisions an external load balancer through the cloud provider.

2. Ingress

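Ingress manages external HTTP and HTTPS routing to Services, providing advanced load balancing features such as TLS termination and host- and path-based routing. An Ingress resource only takes effect when an Ingress controller (for example, the NGINX Ingress Controller or Traefik) is running in the cluster.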
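Path-based routing lets one hostname front several Services. In this sketch, requests to example.com/api go to one backend and everything else to another. The Service names api-service and web-service are hypothetical, and ingressClassName: nginx assumes the NGINX Ingress Controller is installed.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: my-path-ingress           # hypothetical name
spec:
  ingressClassName: nginx         # assumes the NGINX Ingress Controller
  rules:
    - host: example.com
      http:
        paths:
          - path: /api            # example.com/api/... goes to the API
            pathType: Prefix
            backend:
              service:
                name: api-service # hypothetical Service
                port:
                  number: 80
          - path: /               # all other paths go to the web frontend
            pathType: Prefix
            backend:
              service:
                name: web-service # hypothetical Service
                port:
                  number: 80
```

When several paths match a request, the longest matching path takes precedence, so /api requests do not fall through to the / rule.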

3. Endpoints

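Endpoints record the IP addresses and ports of the pods currently backing a Service, forming the backbone of traffic routing. The control plane updates them as pods are added, removed, or change readiness, and kube-proxy uses them to route Service traffic. Current Kubernetes versions use EndpointSlices, a more scalable replacement for the original Endpoints API.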
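The control plane normally builds these objects from a Service’s selector, so you rarely write them by hand. A Service without a selector makes the mapping visible, because you supply the endpoints yourself. The sketch below uses an EndpointSlice; the name my-external-db, the port, and the IP address are hypothetical.

```yaml
# A Service with no selector: Kubernetes creates no endpoints for it.
apiVersion: v1
kind: Service
metadata:
  name: my-external-db          # hypothetical name
spec:
  ports:
    - name: postgres
      protocol: TCP
      port: 5432
      targetPort: 5432
---
# A hand-written EndpointSlice telling the Service where to send traffic.
apiVersion: discovery.k8s.io/v1
kind: EndpointSlice
metadata:
  name: my-external-db-1
  labels:
    kubernetes.io/service-name: my-external-db  # links the slice to the Service
addressType: IPv4
ports:
  - name: postgres              # should match the Service port name
    protocol: TCP
    port: 5432
endpoints:
  - addresses:
      - "10.0.0.10"             # hypothetical backend IP
```

For a selector-based Service, kubectl get endpointslices -l kubernetes.io/service-name=<service-name> shows the slices the control plane generated automatically.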

Implementing Kubernetes Load Balancing

1. Setting Up a ClusterIP Service

ClusterIP is the default service type for internal load balancing.

Example:

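```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-clusterip-service
spec:
  selector:
    app: my-app
  ports:
    - protocol: TCP
      port: 80
      targetPort: 8080
  type: ClusterIP
```

This configuration distributes internal traffic among pods labeled app: my-app. Clients inside the cluster connect to the Service on port 80, and each connection is forwarded to port 8080 on one of the matching pods.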

2. Configuring a NodePort Service

NodePort exposes a service to external traffic.

Example:

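```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-nodeport-service
spec:
  selector:
    app: my-app
  ports:
    - protocol: TCP
      port: 80
      targetPort: 8080
      nodePort: 30001
  type: NodePort
```

This allows access from outside the cluster via <NodeIP>:30001 on any node. The nodePort value must fall within the cluster’s NodePort range (30000-32767 by default); if you omit it, Kubernetes assigns a free port from that range.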

3. Using a LoadBalancer Service

LoadBalancer integrates with cloud providers for external load balancing.

Example:

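```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-loadbalancer-service
spec:
  selector:
    app: my-app
  ports:
    - protocol: TCP
      port: 80
      targetPort: 8080
  type: LoadBalancer
```

On a supported cloud provider, this setup creates a cloud-based load balancer and routes its traffic to the pods labeled app: my-app. Once provisioning finishes, the external address appears in the EXTERNAL-IP column of kubectl get service; on clusters without a cloud or load-balancer integration, it remains pending.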
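By default, a node that receives load-balancer traffic may forward it to a pod on a different node, which adds a hop and hides the client’s source IP. Setting externalTrafficPolicy: Local keeps traffic on the receiving node and preserves the client IP, at the cost of potentially uneven spreading when pods are not evenly distributed across nodes. A sketch of the same Service with that setting; whether it suits you depends on your provider and workload.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-loadbalancer-service
spec:
  selector:
    app: my-app
  ports:
    - protocol: TCP
      port: 80
      targetPort: 8080
  type: LoadBalancer
  # Deliver only to pods on the node that received the traffic:
  # preserves the client source IP and avoids a second hop.
  externalTrafficPolicy: Local
```

With this policy, nodes that run no matching pod fail the load balancer’s health check and stop receiving traffic.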

4. Configuring Ingress for HTTP/HTTPS Routing

Ingress provides advanced traffic management.

Example:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: my-ingress
spec:
  rules:
    - host: example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: my-service
                port:
                  number: 80
```

This configuration routes example.com traffic to port 80 of my-service. Because the path / uses pathType: Prefix, it matches every request path for that host.

Best Practices for Kubernetes Load Balancing

- Use Labels and Selectors: Keep labels consistent so each Service routes only to the pods you intend.

- Monitor Load Balancers: Use tools like Prometheus for observability.

- Configure Health Checks: Use readiness probes so failing pods are removed from Service endpoints, and liveness probes so hung containers are restarted.

- Optimize Autoscaling: Combine load balancing with Horizontal Pod Autoscaler (HPA).

- Secure Ingress: Implement TLS/SSL for encrypted communication.
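Health checks are configured per container. The fragment below goes under a container entry in a Deployment’s pod template (spec.template.spec.containers). It assumes the application exposes a /healthz endpoint on port 8080; that path and the timing values are hypothetical and should be tuned to how quickly your app starts and responds.

```yaml
# Fragment: place under a container in spec.template.spec.containers
readinessProbe:                # while failing, the pod is removed from Service endpoints
  httpGet:
    path: /healthz             # hypothetical health-check endpoint
    port: 8080
  initialDelaySeconds: 5
  periodSeconds: 10
livenessProbe:                 # if failing, the kubelet restarts the container
  httpGet:
    path: /healthz
    port: 8080
  initialDelaySeconds: 15
  periodSeconds: 20
```

Keeping the two probes separate matters: a pod that is temporarily overloaded should stop receiving traffic (readiness) without necessarily being restarted (liveness).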

FAQs

1. What is the difference between NodePort and LoadBalancer?

NodePort exposes a service on each node’s IP, while LoadBalancer integrates with external cloud load balancers to provide a single IP address for external access.

2. Can Kubernetes load balancing handle SSL termination?

Yes. An Ingress controller can terminate SSL/TLS connections using a certificate stored in a Kubernetes Secret that the Ingress references, so backend pods can serve plain HTTP.
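A minimal sketch of TLS termination on the earlier example.com Ingress. It assumes a Secret of type kubernetes.io/tls named example-com-tls (a hypothetical name) already exists in the same namespace, created for instance with kubectl create secret tls example-com-tls --cert=tls.crt --key=tls.key.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: my-tls-ingress             # hypothetical name
spec:
  tls:
    - hosts:
        - example.com
      secretName: example-com-tls  # hypothetical TLS Secret (cert + key)
  rules:
    - host: example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: my-service   # receives decrypted HTTP traffic
                port:
                  number: 80
```

The controller decrypts HTTPS at the edge and forwards plain HTTP to my-service on port 80.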

3. How does Kubernetes handle failover?

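When a pod fails its readiness probe or is deleted, Kubernetes removes it from the Service’s endpoints, so new traffic goes only to healthy pods. Meanwhile, the kubelet restarts containers that fail their liveness probe, and controllers such as Deployments replace pods that are lost.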

4. What tools can enhance load balancing?

Tools like Traefik, NGINX Ingress Controller, and HAProxy provide advanced features for Kubernetes load balancing.

5. Is manual intervention required for scaling?

No. Once configured, the Horizontal Pod Autoscaler (HPA) adjusts the number of pod replicas automatically based on resource usage such as CPU utilization, or on custom metrics such as request rate.
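A minimal HPA sketch targeting a Deployment named my-app (a hypothetical name). It assumes the cluster runs a metrics source such as metrics-server and that the containers declare CPU requests, since utilization is measured relative to those requests. The replica bounds and the 70% target are illustrative.

```yaml
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: my-app-hpa
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: my-app                # hypothetical Deployment to scale
  minReplicas: 2
  maxReplicas: 10
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 70  # aim for ~70% of requested CPU per pod
```

New replicas join the Service’s endpoints as soon as they pass their readiness probes, so the load balancer picks them up without further configuration.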


Conclusion

Kubernetes load balancing is a cornerstone of application performance and reliability. By understanding its mechanisms, types, and implementation strategies, you can optimize your Kubernetes deployments for scalability and resilience. Explore further with hands-on experimentation to unlock its full potential for your applications. Thank you for reading the DevopsRoles page!